
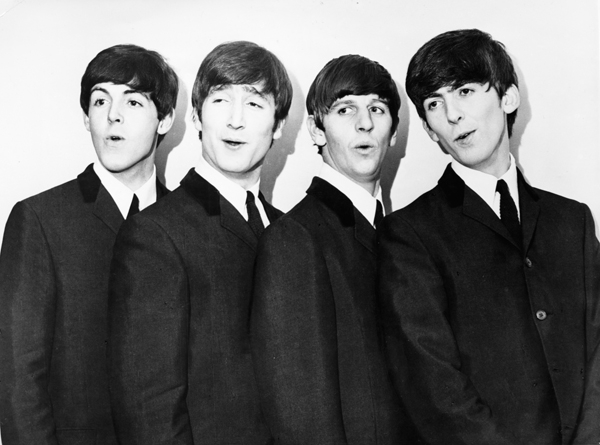

Artificial intelligence identifies the musical progression of the Beatles

SOUTHFIELD, Mich., July 24, 2014 /PRNewswire-USNewswire/ -- Music fans and critics know that the music of the Beatles underwent a dramatic transformation in just a few years, but until now there hasn't been a scientific way to measure that progression. That could change now that computer scientists at Lawrence Technological University have developed an artificial intelligence algorithm that can analyze and compare musical styles, enabling research into the musical progression of the Beatles. Assistant Professor Lior Shamir and graduate student Joe George had previously developed audio analysis technology to study the vocal communication of whales, and they expanded the algorithm to analyze the albums of the Beatles and other well-known bands such as Queen, U2, ABBA and Tears for Fears. The study, published in the August issue of the journal Pattern Recognition Letters, demonstrates scientifically that the structure of the Beatles' music changes from one album to the next.

The algorithm works by first converting each song to a spectrogram, a visual representation of the audio content. This turns an audio analysis task into an image analysis problem, which is solved by applying a comprehensive set of image analysis algorithms that convert each spectrogram into almost 3,000 numeric descriptors reflecting visual aspects such as textures, shapes and the statistical distribution of the pixels. Pattern recognition and statistical methods are then used to detect and quantify the similarities between different pieces of music.
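The three-stage idea (audio to spectrogram, spectrogram to numeric image descriptors, descriptors to a similarity score) can be illustrated with a toy Python sketch. This is not the researchers' software: the frame size, hop length, the five descriptors (pixel mean and spread, two neighbour-contrast texture measures, a frequency centroid) and the plain Euclidean distance are illustrative assumptions standing in for the study's roughly 3,000 descriptors and its pattern recognition methods, and the test signals are synthetic tones and noise rather than songs.

```python
import cmath
import math
import random


def spectrogram(samples, frame_size=64, hop=32):
    """Magnitude spectrogram from a naive short-time DFT with a Hann window.

    Returns a 2-D list: one row per time frame, one column per frequency bin.
    (Assumed parameters; real systems use an FFT and much longer frames.)
    """
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / (frame_size - 1))
              for n in range(frame_size)]
    image = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        chunk = [samples[start + n] * window[n] for n in range(frame_size)]
        row = [abs(sum(chunk[n] * cmath.exp(-2j * math.pi * k * n / frame_size)
                       for n in range(frame_size)))
               for k in range(frame_size // 2 + 1)]
        image.append(row)
    return image


def descriptors(image):
    """Five toy image descriptors, a hypothetical stand-in for ~3,000 features."""
    peak = max(max(row) for row in image) or 1.0
    pix = [[v / peak for v in row] for row in image]  # scale pixels to [0, 1]
    flat = [v for row in pix for v in row]
    n = len(flat)
    # Statistical distribution of pixel intensities.
    mean = sum(flat) / n
    std = math.sqrt(sum((v - mean) ** 2 for v in flat) / n)
    # Texture: average contrast between neighbouring pixels along each axis.
    horiz = [abs(r[j + 1] - r[j]) for r in pix for j in range(len(r) - 1)]
    vert = [abs(pix[i + 1][j] - pix[i][j])
            for i in range(len(pix) - 1) for j in range(len(pix[0]))]
    # Shape: intensity-weighted centre of mass along the frequency axis, in [0, 1].
    total = sum(flat) or 1.0
    width = len(pix[0])
    centroid = sum(j * v for row in pix for j, v in enumerate(row)) / (total * (width - 1))
    return [mean, std,
            sum(horiz) / max(len(horiz), 1),
            sum(vert) / max(len(vert), 1),
            centroid]


def distance(a, b):
    """Euclidean distance between descriptor vectors (smaller = more similar)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def tone(freq, rate=8000, length=2048):
    """Synthetic sine tone used as a stand-in for a recording."""
    return [math.sin(2 * math.pi * freq * t / rate) for t in range(length)]


rng = random.Random(0)
noise = [rng.uniform(-1, 1) for _ in range(2048)]
low = descriptors(spectrogram(tone(440)))
low2 = descriptors(spectrogram(tone(466)))
hiss = descriptors(spectrogram(noise))
print(distance(low, low2) < distance(low, hiss))
```

Two nearby tones should come out closer to each other than either is to white noise. Scaling each spectrogram to its own peak is a design choice that makes the comparison depend on spectral structure rather than loudness; a study-scale system would also weight or normalize the descriptors before measuring distance.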

Source: PR Newswire